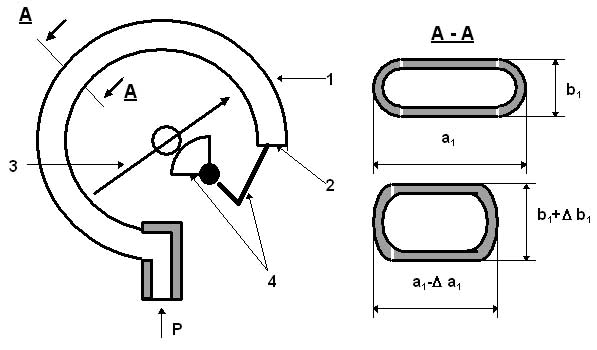

The pressure gauge most widely used in industry for pressure and vacuum measurements (from 20 kPa to 1000 MPa) has a metallic Bourdon tube 1 (see Figure 1) as its sensitive element. Typical tube materials include various stainless-steel alloys, phosphor bronze, brass, beryllium copper, and Monel. The tube is named after its inventor, E. Bourdon, who patented his invention in 1852. It is a bent tube with an elliptical or oval cross-section A-A. When the pressure inside tube 1 increases, the cross-section dimension b1 increases by ∆b1, whereas the cross-section dimension a1 decreases by ∆a1.

As a result, the tube tends to straighten when the pressure increases and to curl more tightly when the pressure decreases (for example, during vacuum measurements), so the tip 2 of the tube moves in proportion to the applied pressure. The movement of the tip is transmitted to the pointer 3 through a mechanism 4. After the pressure is removed, the tube tends to return to its original shape, and the pointer returns to its starting position. The relationship between the tip movement ∆x and the measured pressure is linear, so the scale of this pressure gauge is uniform.
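The linear relationship between tip movement and pressure can be illustrated with a minimal sketch. The function names, the sensitivity value, and the 270° pointer sweep below are hypothetical choices made only for illustration; they are not taken from any particular gauge.

```python
def tip_displacement(pressure_kpa, sensitivity_mm_per_kpa):
    """Tip movement (mm) of an idealized linear tube: dx = k * p.

    sensitivity_mm_per_kpa is an assumed constant k for this sketch.
    """
    return sensitivity_mm_per_kpa * pressure_kpa


def pointer_angle(pressure_kpa, full_scale_kpa, sweep_deg=270.0):
    """Pointer angle (degrees) on a uniform scale.

    Assumes the mechanism maps tip movement to angle proportionally and
    that the pointer sweeps sweep_deg degrees at full-scale pressure.
    """
    return sweep_deg * pressure_kpa / full_scale_kpa


if __name__ == "__main__":
    # Equal pressure steps produce equal angle steps (uniform scale).
    for p in (0, 250, 500, 750, 1000):
        print(p, "kPa ->", pointer_angle(p, full_scale_kpa=1000), "deg")
```

Because the angle is proportional to the pressure, the graduation marks on the dial are equally spaced.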

Some degree of hysteresis still exists during operation of these pressure gauges, because metals do not fully recover their initial elastic properties. Of two Bourdon tubes made of the same metal, the tube with the larger radius and the thinner wall has the higher sensitivity. The accuracy of a typical Bourdon-tube pressure gauge is ±1%, whereas a specially designed gauge may have better accuracy, from ±0.25% to ±0.5%.
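To see what these accuracy figures mean in practice, the following sketch converts an accuracy rating into an absolute error band. It assumes that the percentage refers to the full-scale value of the gauge, which is a common convention but is not stated in the text; the function names are hypothetical.

```python
def error_band(full_scale, accuracy_percent):
    """Absolute error (same unit as full_scale).

    Assumption: accuracy is given as a percentage of the full-scale value.
    """
    return full_scale * accuracy_percent / 100.0


def reading_limits(reading, full_scale, accuracy_percent):
    """Lower and upper limits of the true pressure for a given reading."""
    e = error_band(full_scale, accuracy_percent)
    return reading - e, reading + e


if __name__ == "__main__":
    # A 0-1000 kPa gauge rated at +/-1% compared with one rated at +/-0.25%.
    print(reading_limits(400, 1000, 1.0))
    print(reading_limits(400, 1000, 0.25))
```

Under this assumption, the error band is the same at every point of the scale, so the relative error is largest for readings near the low end of the range.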