There are two ways of stating measurement error and uncertainty over the entire range of a measuring instrument:

• Percent of full scale deflection or FSD

• Percent of reading or indicated value

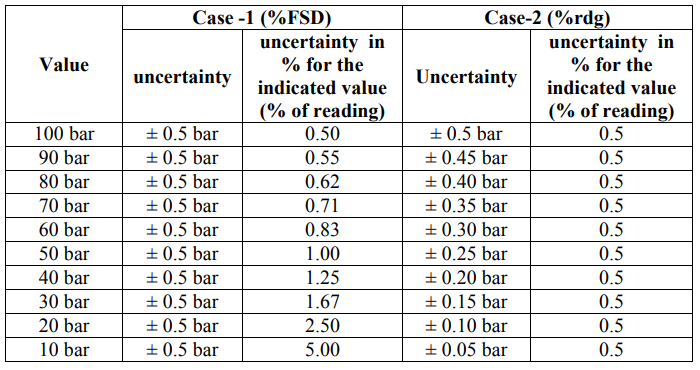

The difference between the two concepts becomes highly significant when an instrument is operating near the bottom of its turndown range. The following example shows the difference between the two.

Assume you have a pressure gauge with a full-scale (maximum) value of 100 bar, and that its uncertainty is stated in one of two ways:

Case-1:

With a stated uncertainty of ± 0.5% FSD, the uncertainty is ± 0.5 bar over the entire range. At the full-scale reading of 100 bar this represents the “best case” uncertainty of the measurement. At lower readings, however, the same ± 0.5 bar becomes a larger fraction of the measured value.

Case-2:

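With a stated uncertainty of ± 0.5% of reading, the uncertainty at 100 bar is also ± 0.5 bar, but it shrinks in proportion to the reading, for example to ± 0.05 bar at 10 bar.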

The following table explains the difference

Therefore, what looks like a good uncertainty at full scale translates into a substantially larger percentage uncertainty at the lower end of the tester's range in Case-1.

As the above cases show, an uncertainty specified relative to the full-scale value grows significantly, as a percentage of the reading, as you go lower in the range, while an uncertainty specified as a percentage of the indicated value stays constant throughout the useful range of the unit under test (UUT).
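The comparison between the two cases can be reproduced with a few lines of arithmetic. The following is a minimal sketch, assuming the 100 bar gauge and the 0.5% figure from the example above; the function names and the list of sample readings are hypothetical and chosen only for illustration.

```python
# Sketch only: compares a %FSD spec with a %-of-reading spec for one gauge.
# FULL_SCALE and SPEC_PCT come from the example in the text; the function
# names and the sample readings are illustrative assumptions.

FULL_SCALE = 100.0  # bar, full-scale value of the gauge
SPEC_PCT = 0.5      # stated uncertainty, in percent


def uncertainty_fsd(full_scale, pct_fsd):
    """Absolute uncertainty (bar) for a %FSD spec: constant over the range."""
    return full_scale * pct_fsd / 100.0


def uncertainty_reading(reading, pct_reading):
    """Absolute uncertainty (bar) for a %-of-reading spec: scales with reading."""
    return reading * pct_reading / 100.0


def relative_pct(abs_uncertainty, reading):
    """Express an absolute uncertainty as a percentage of the reading."""
    return abs_uncertainty / reading * 100.0


def compare(readings):
    """Return one row per reading:
    (reading, u_fsd, u_fsd as % of reading, u_rdg, u_rdg as % of reading)."""
    rows = []
    for r in readings:
        u_fsd = uncertainty_fsd(FULL_SCALE, SPEC_PCT)
        u_rdg = uncertainty_reading(r, SPEC_PCT)
        rows.append((r, u_fsd, relative_pct(u_fsd, r),
                     u_rdg, relative_pct(u_rdg, r)))
    return rows


# Print the comparison for a few sample readings (zero is excluded because
# the percentage of reading is undefined there).
print("reading  u_FSD(bar)  u_FSD(%rdg)  u_rdg(bar)  u_rdg(%rdg)")
for r, u_fsd, p_fsd, u_rdg, p_rdg in compare([100, 50, 25, 10, 5]):
    print(f"{r:7.1f}  {u_fsd:10.3f}  {p_fsd:11.2f}  {u_rdg:10.3f}  {p_rdg:11.2f}")
```

The Case-1 column holds the absolute uncertainty fixed and lets the percentage of reading grow, while the Case-2 column holds the percentage fixed and lets the absolute uncertainty shrink.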

The above explanation also holds for the error of the equipment when it is expressed in % of reading or % FSD.

Hence, care must be taken in selecting the right equipment for calibration in the laboratory, and also when issuing the calibration report for the calibrated equipment.

Equipment with %FSD

Generally, when a manufacturer declares an uncertainty in % FSD, the useful range will be from zero to full scale, for example ± 0.5% FSD from 0 to 100 bar. This is interesting because at 0 bar the system is still only accurate to within ± 0.5 bar. In other words, the error expressed as a percentage of reading goes to infinity as the reading approaches zero.
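The behaviour near zero can be written out explicitly. As a sketch, assume the 100 bar gauge with ± 0.5% FSD from the example, and let R be the reading in bar and u_rel the uncertainty as a percentage of reading (both symbols are introduced here for illustration only):

```latex
u_{\mathrm{rel}}(R) = \frac{0.5\ \text{bar}}{R} \times 100\,\%,
\qquad
u_{\mathrm{rel}}(10\ \text{bar}) = 5\,\%,
\qquad
u_{\mathrm{rel}}(1\ \text{bar}) = 50\,\%,
\qquad
\lim_{R \to 0^{+}} u_{\mathrm{rel}}(R) = \infty
```

The absolute uncertainty stays at ± 0.5 bar throughout; only its ratio to the reading diverges.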